Scaling applications in the cloud is a challenging yet crucial aspect of modern application deployment. This challenge often seems insurmountable for those entrenched in traditional, self-managed Kubernetes clusters. These environments demand a significant commitment to managing nodes, optimizing resource allocation, and maintaining high availability—a balancing act that can strain even the most experienced teams.

The Scaling Struggle with Traditional Kubernetes

In a traditional self-managed Kubernetes setup, scaling is often hindered by a few key challenges:

- Resource Management: Manually managing resources tends to lead to either underutilization (paying for more capacity than you need) or overutilization (applications starved of the resources they need).

- Operational Overhead: Maintaining cluster infrastructure, including upgrades and patches, requires significant time and effort and distracts from development work.

- Complexity in Configuration: Implementing a scaling solution involves deep technical know-how and careful configuration, which can be a steep learning curve for many teams.

Embracing the AWS EKS Advantage

Enter Amazon Elastic Kubernetes Service (EKS), a managed service that revolutionizes how we approach Kubernetes scaling. EKS abstracts much of the complexity inherent in a self-managed cluster, integrating seamlessly with AWS native services. This integration offers a few distinct advantages:

- Reduced Operational Overhead: EKS manages the Kubernetes control plane, ensuring that it runs efficiently and securely and stays up to date.

- Enhanced Security: AWS handles the security of the underlying infrastructure, providing peace of mind and allowing teams to focus on application development.

- Native AWS Integration: EKS integrates with various AWS services, allowing for smoother, more efficient operations and scaling.

Exploring Scaling Solutions in EKS: Managed Node Groups and Fargate

The Role of Managed Node Groups in EKS

AWS EKS Managed Node Groups simplify the management of EC2 instances serving as worker nodes in an EKS cluster. These node groups streamline various processes:

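- Automated Management: Node Groups automate node provisioning and lifecycle tasks such as updates and graceful node draining, reducing manual intervention and operational complexity. Scaling the group in response to pod demand still relies on an autoscaler such as Cluster Autoscaler or Karpenter.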

- Customizable Configurations: They allow custom configurations to tailor computing resources to applications’ specific needs.

- Integrated Monitoring: With native AWS integrations, monitoring and managing nodes’ health and performance becomes more straightforward.
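To make the scaling side of a node group concrete, here is a minimal sketch in plain Python (not an AWS API call) of how the desired size of a homogeneous node group could be derived from pod CPU requests and then clamped to the group's minimum and maximum size. The function name, the per-node CPU figure, and the pod requests are all illustrative assumptions.

```python
import math


def desired_node_count(pod_cpu_requests, cpu_per_node, min_size, max_size):
    """Return how many identical nodes are needed for the given CPU requests.

    Simplified model: it only sums CPU and ignores memory, per-node system
    overhead, and bin-packing fragmentation, all of which matter in practice.
    """
    if cpu_per_node <= 0:
        raise ValueError("cpu_per_node must be positive")
    needed = math.ceil(sum(pod_cpu_requests) / cpu_per_node)
    # The result is kept within the group's configured size bounds.
    return max(min_size, min(max_size, needed))


# Hypothetical workload: ten pods requesting 0.5 vCPU each, on 2-vCPU nodes.
print(desired_node_count([0.5] * 10, cpu_per_node=2, min_size=1, max_size=5))
```

Because every node in the group has the same shape, the only lever in this model is the node count, which is the limitation Karpenter addresses later in this article.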

Harnessing the Power of AWS Fargate in EKS

AWS Fargate presents a serverless approach to running containers, eliminating the need to provision and manage worker nodes:

- Serverless Compute Engine: Fargate allows applications to run in containers without managing underlying server infrastructure.

- Resource-Efficient Scaling: It scales resources on-demand, ensuring applications have the required computing power without over-provisioning.

- Streamlined Operations: Fargate simplifies operations, enabling teams to focus more on development and less on infrastructure management.

Comparative Analysis: Managed Node Groups vs Fargate

To better understand these two scaling solutions, let’s compare them side-by-side:

| Feature | Managed Node Groups | AWS Fargate |
| --- | --- | --- |
| Infrastructure Management | Automated management of EC2 instances | Serverless, no infrastructure management required |
| Scaling Approach | Manual and auto-scaling options available | Automatically scales computing resources |
| Resource Allocation | Customizable EC2 instances | Pay for actual usage of resources |
| Operational Complexity | Reduced, but requires some oversight | Minimal, focuses on application development |
| Cost Efficiency | Efficient for steady workloads | More cost-effective for spiky, unpredictable workloads |
| Control and Configuration | High degree of control over instances | Less control, more abstraction |
| Integration with AWS Services | Extensive, including CloudWatch and Auto Scaling | Seamless integration with other AWS services |
| Use Case Suitability | Suitable for steady, predictable workloads | Ideal for short-lived, sporadic, or unpredictable workloads |
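The cost-efficiency row can be illustrated with a back-of-the-envelope calculation. The sketch below compares an always-on node against per-pod billing for a steady and a spiky workload. The prices are made-up placeholders in cents, not real AWS pricing, and the model assumes the node group keeps one node running for the whole month.

```python
def node_group_cost(hours, nodes, cents_per_node_hour):
    """Nodes are billed for every hour they run, whether busy or idle."""
    return hours * nodes * cents_per_node_hour


def fargate_cost(pod_hours, cents_per_pod_hour):
    """Pods are billed only for the hours they actually run."""
    return pod_hours * cents_per_pod_hour


# Placeholder prices for illustration only; NOT real AWS pricing.
NODE_CENTS_PER_HOUR = 10  # one node, assumed large enough for 4 pods
POD_CENTS_PER_HOUR = 4    # one Fargate pod
HOURS_IN_MONTH = 720

# Steady workload: 4 pods running all month.
steady_nodes = node_group_cost(HOURS_IN_MONTH, 1, NODE_CENTS_PER_HOUR)
steady_fargate = fargate_cost(4 * HOURS_IN_MONTH, POD_CENTS_PER_HOUR)

# Spiky workload: the same 4 pods, but busy for only 72 hours in the month.
spiky_nodes = node_group_cost(HOURS_IN_MONTH, 1, NODE_CENTS_PER_HOUR)
spiky_fargate = fargate_cost(4 * 72, POD_CENTS_PER_HOUR)

print("steady:", steady_nodes, "vs", steady_fargate)
print("spiky: ", spiky_nodes, "vs", spiky_fargate)
```

With these placeholder numbers the node wins for the steady workload and Fargate wins for the spiky one. Real pricing and real scale-down behaviour will shift the break-even point, so treat this as a way of framing the comparison rather than a result.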

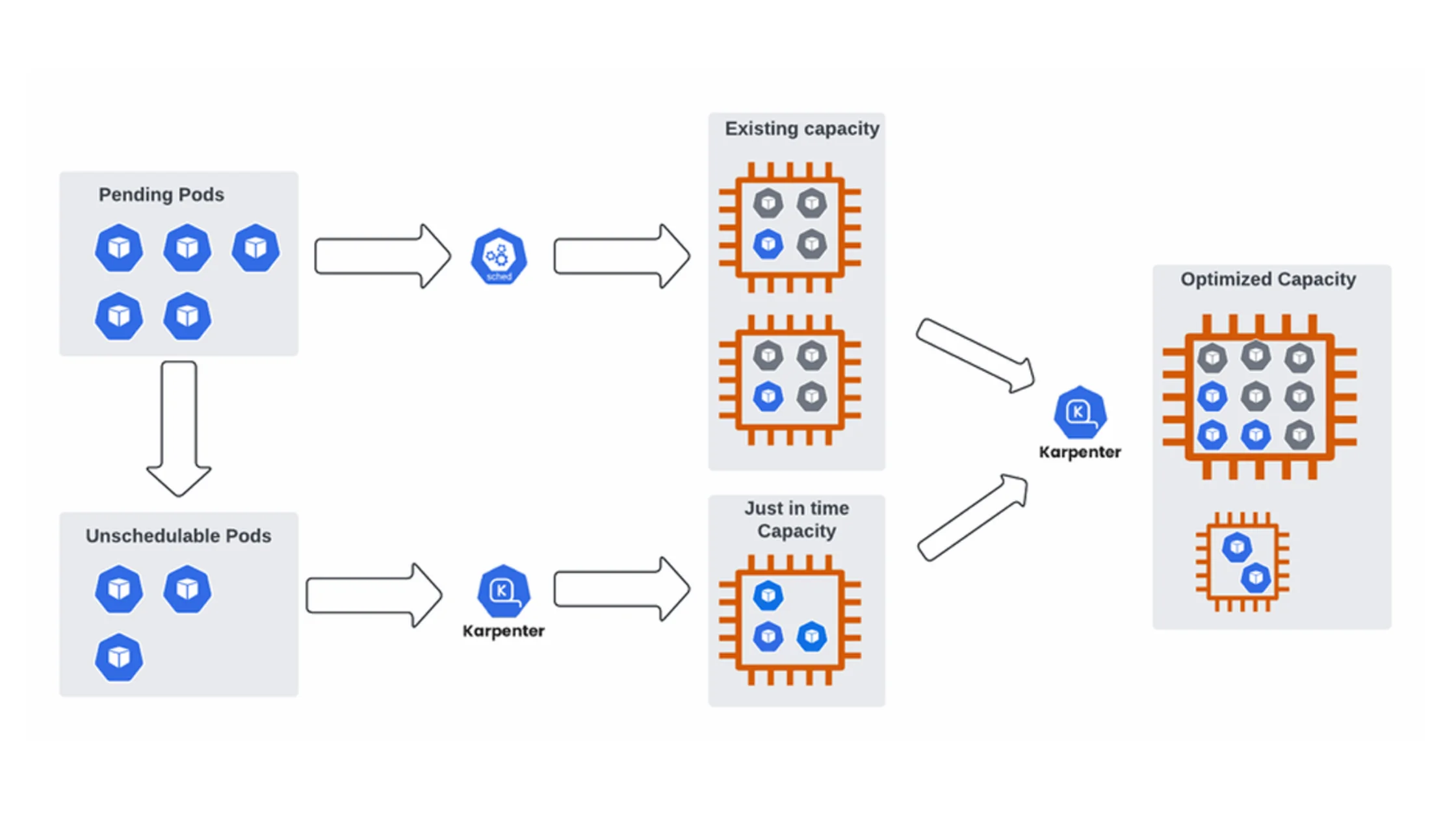

Karpenter: A Game Changer for EC2-based Scaling

Karpenter takes EC2-based node scaling to the next level. It’s an open-source auto-scaler designed for Kubernetes on AWS that optimizes the provisioning of compute resources based on application demands. What sets Karpenter apart is its ability to:

- Rapidly Launch Resources: Karpenter responds quickly to changes in workload demands, ensuring that your applications have the resources they need when needed.

- Efficiently Manage Resources: By evaluating the resource requests and scheduling constraints of pending pods, Karpenter can provision the right type and amount of resources, minimizing waste and optimizing costs.

- Ease of Use: Karpenter is designed to be simple to set up and manage, reducing the operational complexity typically associated with scaling.

Karpenter monitors the state of pods in a Kubernetes cluster and automatically adjusts the capacity of worker nodes to meet demand. In doing so, it optimizes resource utilization and ensures all pods are scheduled and running efficiently. The key elements of this process are:

- Pending Pods: These pods have been created but are not scheduled to run on a node because the current cluster capacity doesn’t meet their resource requirements.

- Unscheduled Pods: These pods cannot be scheduled due to current capacity constraints. Perhaps all nodes are at full capacity or lack the required resources.

- Existing Capacity: This represents the current nodes within the Kubernetes cluster that are running workloads. The cluster might be fully utilized or lack the appropriate resource types or amounts for the pending/unscheduled pods.

- Just-in-Time Capacity: When Karpenter detects unscheduled pods, it assesses their resource requirements and provisions new nodes with the specific resources needed. It’s designed to make these decisions quickly and to provision nodes in a matter of seconds.

- Optimized Capacity: After Karpenter provisions the new nodes, the pending and unscheduled pods can be scheduled. This results in an optimized cluster that more accurately fits the workload demands, often improving cost efficiency and performance.
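The flow above can be sketched in a few lines of Python. This is a deliberately simplified model, not Karpenter's actual algorithm: it sums the requests of the pending pods and picks the cheapest single instance type that can hold them all. The `InstanceType` and `Pod` classes, the catalog entries, and the prices are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    cpu: int           # vCPUs
    memory_gib: int
    hourly_cents: int  # placeholder price, not real AWS pricing


@dataclass(frozen=True)
class Pod:
    name: str
    cpu: int
    memory_gib: int


def cheapest_fit(pending, catalog):
    """Pick the cheapest single instance type that holds all pending pods.

    Returns None when no single type is large enough. A real provisioner
    would also consider multiple nodes, zones, taints, and affinities.
    """
    need_cpu = sum(p.cpu for p in pending)
    need_mem = sum(p.memory_gib for p in pending)
    candidates = [
        t for t in catalog
        if t.cpu >= need_cpu and t.memory_gib >= need_mem
    ]
    return min(candidates, key=lambda t: t.hourly_cents, default=None)


# Hypothetical catalog and pending pods.
catalog = [
    InstanceType("small", cpu=2, memory_gib=4, hourly_cents=5),
    InstanceType("medium", cpu=4, memory_gib=8, hourly_cents=10),
    InstanceType("large", cpu=8, memory_gib=16, hourly_cents=20),
]
pending = [Pod("api", cpu=1, memory_gib=2), Pod("worker", cpu=2, memory_gib=4)]

choice = cheapest_fit(pending, catalog)
print(choice.name if choice else "no single instance type fits")
```

The point of the sketch is the direction of the decision: the node is chosen to fit the pods, rather than the pods being squeezed onto whatever node shape a group happens to offer.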

Karpenter improves upon the concept of Managed Node Groups in several ways:

- Proactive Resource Optimization: Karpenter dynamically provisions nodes that are closely tailored to the specific needs of pending pods. It doesn’t over-provision resources but instead aims to match precisely what is needed, which can lead to cost savings.

- Quick Scaling: Karpenter is designed to provision nodes rapidly, enabling it to respond quickly to changes in workload demands. This can be particularly useful in workloads that have sporadic or unpredictable spikes.

- Efficient Scaling Down: Karpenter doesn’t just scale up; it also intelligently scales down by terminating nodes that are no longer needed, minimizing waste.

- Flexibility: Karpenter can provision a wide variety of node types and sizes, allowing for a more flexible response to workload requirements than Node Groups, which are often homogeneous in instance type and size.

- Real-Time Evaluation: It continuously evaluates the environment and makes adjustments in real-time, which means it can handle changes in workload patterns more fluidly than the more static scaling methods used by Node Groups.
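Scaling down is the less obvious half of this list, so here is a hedged sketch of the underlying idea: a node is a candidate for removal if every pod on it could be rescheduled onto the free capacity of the remaining nodes. The `can_drain` helper, the node names, and the CPU-only first-fit placement are assumptions made for illustration; Karpenter's real logic takes many more constraints into account.

```python
def can_drain(target, nodes):
    """Return True if every pod on `target` fits onto the other nodes.

    `nodes` maps a node name to (cpu_capacity, [pod_cpu_requests]).
    Placement is first-fit on CPU only, which is a simplification.
    """
    free = {
        name: capacity - sum(pods)
        for name, (capacity, pods) in nodes.items()
        if name != target
    }
    # Place the largest pods first so they are not blocked by small ones.
    for pod_cpu in sorted(nodes[target][1], reverse=True):
        host = next((n for n, f in free.items() if f >= pod_cpu), None)
        if host is None:
            return False
        free[host] -= pod_cpu
    return True


# Hypothetical cluster of three 4-vCPU nodes.
cluster = {
    "node-a": (4, [4]),  # full
    "node-b": (4, [3]),  # 1 vCPU free
    "node-c": (4, [1]),  # lightly used
}

print(can_drain("node-a", cluster))  # False: no other node has 4 vCPUs free
print(can_drain("node-c", cluster))  # True: its 1-vCPU pod fits on node-b
```

A node with no pods at all trivially passes this check, which corresponds to the simplest case of removing empty nodes.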

Setting the Stage for Advanced Pod Scaling

As we wrap up this exploration of scaling in AWS EKS, it’s worth noting that node-level scaling is only part of the picture. In the next installment of our series, we’ll delve deeper into Kubernetes scaling by exploring the Horizontal Pod Autoscaler (HPA) and the Vertical Pod Autoscaler (VPA). These tools fine-tune application scaling at the pod level, helping your pods run at their optimal capacity. Stay tuned as we unpack the intricacies of pod-level scaling and how it fits into the EKS environment.

Remember, mastering scaling in Kubernetes is a journey, not a destination. As you embark on this path with AWS EKS, you’re not just scaling your applications; you’re scaling your operational prowess and paving the way for a more resilient, efficient, and dynamic application environment.