Every scroll, click, and conversion today begins with one thing: a compelling visual. In a digital landscape where audiences make decisions in under 3 seconds, relying on traditional design timelines is no longer sustainable.

Some recent industry estimates suggest that approximately 71% of images shared on social media platforms are now AI-generated, pointing to a major shift in how content is created and consumed.

Marketers, advertisers, and content creators increasingly use AI tools to save time and scale personalized, on-brand visuals without relying solely on traditional design workflows.

Whether it's a product mockup for an ad, a themed image for a seasonal campaign, or a quick visual for a trending topic, AI can produce it in minutes.

In this blog post, we’ll explore how to generate AI images from text, breaking down the tools, techniques, and tips you need to create stunning visuals that boost engagement across marketing, social media, and ads.

Understanding Text-to-Image AI: Turning Descriptions into Visuals

Text-to-Image AI refers to machine learning models that generate images based on textual descriptions. These models interpret natural language inputs and produce corresponding visual representations, bridging the gap between linguistic understanding and visual creation.

Under the hood, these models learn the relationships between words and visual features, then generate an image that matches the meaning of the prompt. This lets users create visuals without manual drawing or photography, simply by describing what they want.

This technology facilitates various applications, including content creation, design prototyping, and accessibility enhancements. It also democratizes image generation, allowing individuals without artistic skills to produce visual content efficiently.

Examples of Prompts and Outputs

1. Prompt: “A serene beach at sunset with palm trees and gentle waves.”

Generated Image: An illustration depicting a tranquil beach scene, showcasing the warm hues of a setting sun, silhouetted palm trees, and calm ocean waves lapping the shore.

2. Prompt: “A medieval castle atop a hill surrounded by dense forest.”

Generated Image: An image portraying a grand stone castle perched on a hill, encircled by lush green forests, evoking a historical and majestic ambiance.

Types of Deep Learning Models Behind AI Image Generation

Below is a breakdown of the different types of deep learning models that power AI image generation and how each contributes to the process.

1. Diffusion Models

Diffusion models generate images by starting with random noise and progressively refining it into coherent images that align with a given text prompt. Here is how they work:

- Noise Addition: During training, images are gradually corrupted by adding noise in multiple steps.

- Learning the Reverse Process: The model learns to reverse this process, effectively denoising the image step by step to reconstruct the original image.

- Generation Phase: At inference, the model starts with random noise and applies the learned denoising steps to produce a new image corresponding to the input prompt.

Diffusion models are utilized in tools like Stable Diffusion and DALL·E 2 for generating high-quality images from textual descriptions.
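To make the three steps concrete, here is a minimal, text-free sketch in plain Python of a DDPM-style diffusion process on a tiny "image" of three pixel values. It assumes a linear noise schedule and replaces the trained neural network with an oracle noise predictor that already knows the target image, so it illustrates the mechanics of noising and step-by-step denoising rather than real learning or prompt conditioning. All function names are illustrative.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, increasing linearly (a common DDPM choice)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: how much original signal survives."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def add_noise(x0, alpha_bar_t, rng):
    """Training-time corruption in one jump: x_t = sqrt(a_bar)*x0 + sqrt(1 - a_bar)*eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar_t) * v + math.sqrt(1.0 - alpha_bar_t) * e
          for v, e in zip(x0, eps)]
    return xt, eps

def oracle_noise_predictor(xt, alpha_bar_t, target):
    """Hypothetical stand-in for a trained network: exact noise if the data is always `target`."""
    return [(v - math.sqrt(alpha_bar_t) * c) / math.sqrt(1.0 - alpha_bar_t)
            for v, c in zip(xt, target)]

def sample(target, num_steps=1000, seed=0):
    """Generation: start from pure noise and apply the denoising step T times."""
    rng = random.Random(seed)
    betas = linear_beta_schedule(num_steps)
    alpha_bars = cumulative_alphas(betas)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # x_T drawn from a standard normal
    for t in reversed(range(num_steps)):
        beta, a_bar = betas[t], alpha_bars[t]
        eps_hat = oracle_noise_predictor(x, a_bar, target)
        # Remove the predicted noise (DDPM mean update)
        mean = [(v - beta / math.sqrt(1.0 - a_bar) * e) / math.sqrt(1.0 - beta)
                for v, e in zip(x, eps_hat)]
        if t > 0:
            # Re-inject a little fresh noise, using variance beta_t
            x = [m + math.sqrt(beta) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # no noise is added on the final step
    return x

print(sample([0.5, -1.0, 0.25]))
```

Because the oracle predicts the noise perfectly, the final sample lands on the target values up to floating-point rounding; with a real model, the prediction is learned from data and the prompt embedding is fed in as an extra input.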

2. Generative Adversarial Networks (GANs)

GANs consist of two neural networks, the Generator and the Discriminator, that are trained simultaneously through a competitive process. Here is how they work:

- Generator: Creates synthetic images from random noise.

- Discriminator: Evaluates images and distinguishes between real (from the training set) and fake (produced by the Generator).

- Adversarial Training: The Generator aims to produce images that can fool the Discriminator, while the Discriminator strives to improve its detection accuracy. Training continues until, ideally, the Generator's images are realistic enough that the Discriminator can no longer reliably tell them apart from real ones.

GANs are widely used for image synthesis, style transfer, and enhancing image resolution tasks.
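The competing objectives can be written down in a few lines. The plain-Python sketch below computes the standard binary cross-entropy loss for the Discriminator and the commonly used "non-saturating" loss for the Generator, both from the Discriminator's output probabilities. The networks themselves and their gradient updates are omitted, and the function names are illustrative.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: reward D for scoring real samples near 1 and fakes near 0.

    d_real / d_fake are D's estimated probabilities that each sample is real.
    """
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D scores its fakes as real."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)

# A sharp Discriminator (real -> 0.99, fake -> 0.01) has a low loss,
# which in turn leaves the Generator with a high loss to push down.
print(discriminator_loss([0.99], [0.01]), generator_loss([0.01]))
```

When the Discriminator is completely fooled and outputs 0.5 for every sample, its loss equals 2·ln 2 (about 1.386), the value associated with the theoretical equilibrium of the adversarial game.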

3. Transformer Models

Transformer models are neural network architectures that excel at processing sequential data and capturing contextual relationships. In AI image generation, transformers understand and translate textual descriptions into visual representations. Here is how these models work:

- Text Encoding: The transformer processes the input text to generate a semantic representation that captures the meaning and context of the description.

- Image Generation: This semantic representation is then used to guide the generation of images that align with the described content.

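The original DALL·E generated images with a transformer that predicts a sequence of image tokens from the text, while later systems such as DALL·E 2 and Stable Diffusion use transformer-based text encoders to condition a diffusion model. In both cases, the transformer helps produce coherent, semantically aligned visuals.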
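At the core of a transformer text encoder is self-attention, which lets each token's representation draw on the other tokens in the prompt (for example, so that "castle" is informed by "hill" and "forest"). The minimal single-head sketch below assumes hand-picked 4-dimensional token vectors and identity query/key/value projections; a real encoder learns those projections and stacks many such layers.

```python
import math

def softmax(scores):
    """Numerically stable softmax: turns raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention with identity Q/K/V projections.

    Returns the contextualized token vectors and the attention weight matrix.
    """
    d = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Similarity of this token to every token, scaled by sqrt(d)
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in tokens]
        w = softmax(scores)
        weights.append(w)
        # New vector = attention-weighted average of all token vectors (the "values")
        outputs.append([sum(w[j] * tokens[j][i] for j in range(len(tokens))) for i in range(d)])
    return outputs, weights

# Hypothetical toy embeddings for the prompt "castle hill forest"
prompt_vectors = [
    [1.0, 0.2, 0.0, 0.5],  # "castle"
    [0.3, 1.0, 0.1, 0.0],  # "hill"
    [0.0, 0.4, 1.0, 0.2],  # "forest"
]
contextual, attn = self_attention(prompt_vectors)
for row in attn:
    print([round(w, 3) for w in row])
```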

How Are Text-to-Image AI Models Trained to Generate Images?

Below is an explanation of how text-to-image AI models are trained to understand language and generate matching visuals.

Training Methodologies

1. Paired Text-Image Datasets

Text-to-image models are trained on large datasets comprising images paired with descriptive text captions. These datasets enable models to learn the associations between textual elements and visual features, forming the foundation for generating images from new text inputs.
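Structurally, such a dataset is a long list of (image, caption) pairs. The sketch below, with hypothetical file names and captions, shows the property that matters most when feeding it to a model: shuffling and batching must keep each caption attached to its own image, because the model learns from exactly that correspondence. Real pipelines also load and decode the images; that step is omitted here.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class ImageTextPair:
    image_path: str  # hypothetical path to the image file
    caption: str     # the text that describes that image

def batches(pairs, batch_size, seed=0):
    """Shuffle whole pairs (never images and captions separately) and yield batches."""
    order = list(pairs)
    random.Random(seed).shuffle(order)
    for start in range(0, len(order), batch_size):
        batch = order[start:start + batch_size]
        images = [p.image_path for p in batch]
        captions = [p.caption for p in batch]
        yield images, captions  # images[i] is described by captions[i]

dataset = [
    ImageTextPair("beach.jpg", "a serene beach at sunset with palm trees"),
    ImageTextPair("castle.jpg", "a medieval castle atop a forested hill"),
    ImageTextPair("cardinal.jpg", "a red cardinal perched on a snowy branch"),
]
for images, captions in batches(dataset, batch_size=2):
    print(list(zip(images, captions)))
```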

2. Contrastive Learning and CLIP Models

Contrastive Language-Image Pre-training (CLIP) models are trained to align textual and visual representations in a shared embedding space. By learning to associate matching text-image pairs and distinguish non-matching ones, CLIP provides text representations and similarity scores that help generative models produce images that accurately reflect the input text.
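The idea behind CLIP's training objective can be sketched compactly: within a batch, each image should be most similar to its own caption, and each caption to its own image. The plain-Python sketch below computes a symmetric cross-entropy loss over a cosine-similarity matrix. The tiny 2-D embeddings are hypothetical stand-ins for the outputs of CLIP's image and text encoders, and the fixed temperature of 0.07 is an assumption (CLIP learns its temperature during training).

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def cross_entropy(logits, target):
    """-log softmax(logits)[target], computed stably."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]

def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss: pair i (image i, caption i) should beat every mismatch."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = similarity of image i and caption j, sharpened by the temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
              for i in range(n)]
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_image = sum(cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2

images = [[1.0, 0.0], [0.0, 1.0]]
print(clip_style_loss(images, [[1.0, 0.0], [0.0, 1.0]]))  # captions match their images
print(clip_style_loss(images, [[0.0, 1.0], [1.0, 0.0]]))  # captions swapped
```

Matched pairs give a loss close to zero, while swapped captions give a large loss; training adjusts both encoders to push real pairs toward the first situation.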

Image Generation Pipeline

1. Prompt Encoding

The input text prompt is first tokenized and converted into a numerical representation that the model can process. This encoding captures the text’s semantic meaning, serving as the basis for image generation.
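Real systems use subword tokenizers (CLIP's text encoder, for instance, uses byte-pair encoding with a fixed context of 77 tokens), but the shape of this step is easy to sketch. The word-level version below, with a hypothetical vocabulary and illustrative special-token ids, turns a prompt into a fixed-length list of integers; a learned embedding table and transformer would then map those ids to the semantic representation described above.

```python
import re

# Illustrative special-token ids
PAD, START, END, UNK = 0, 1, 2, 3

def build_vocab(corpus):
    """Assign an integer id to every lowercase word seen in a (hypothetical) corpus."""
    vocab = {}
    for text in corpus:
        for word in re.findall(r"[a-z]+", text.lower()):
            vocab.setdefault(word, len(vocab) + 4)  # ids 0-3 are reserved
    return vocab

def encode_prompt(prompt, vocab, max_len=77):
    """Word-level tokenize, wrap in start/end markers, then truncate or pad to max_len."""
    ids = [vocab.get(w, UNK) for w in re.findall(r"[a-z]+", prompt.lower())]
    ids = [START] + ids[: max_len - 2] + [END]
    return ids + [PAD] * (max_len - len(ids))

vocab = build_vocab(["a serene beach at sunset", "palm trees and gentle waves"])
print(encode_prompt("A serene beach with dolphins", vocab)[:8])
```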

2. Image Synthesis

Using the encoded prompt, the model initiates the image generation process:

- Diffusion Models: Start with a noise-filled image and iteratively refine it through denoising steps guided by the prompt.

- Transformer-Based Models: Generate images by predicting pixel values or image patches corresponding to the textual description.

3. Sampling Methods and Refinement

Techniques like classifier-free guidance balance the fidelity to the text prompt with the realism of the generated image. These methods adjust the influence of the text on the image generation process, allowing for fine-tuning of the output’s adherence to the input description.
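In the form commonly used by diffusion models, classifier-free guidance runs the denoiser twice per step, once with the prompt and once without, and extrapolates from the unconditional prediction toward the conditional one: noise = noise_uncond + w * (noise_cond - noise_uncond), where w is the guidance scale. The sketch below applies that formula element-wise; the inputs are hypothetical numbers rather than real model outputs. Some papers write the same idea with a shifted scale, (1 + w) * noise_cond - w * noise_uncond.

```python
def classifier_free_guidance(noise_uncond, noise_cond, guidance_scale):
    """Combine two noise predictions made at the same denoising step.

    noise_uncond: prediction with an empty prompt; noise_cond: prediction with the prompt.
    guidance_scale = 0 ignores the prompt, 1 reproduces the conditional prediction, and
    larger values push further in the prompt's direction (closer adherence to the text,
    typically at some cost in variety or realism).
    """
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]

print(classifier_free_guidance([0.0, 1.0], [1.0, 0.5], 7.5))
```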

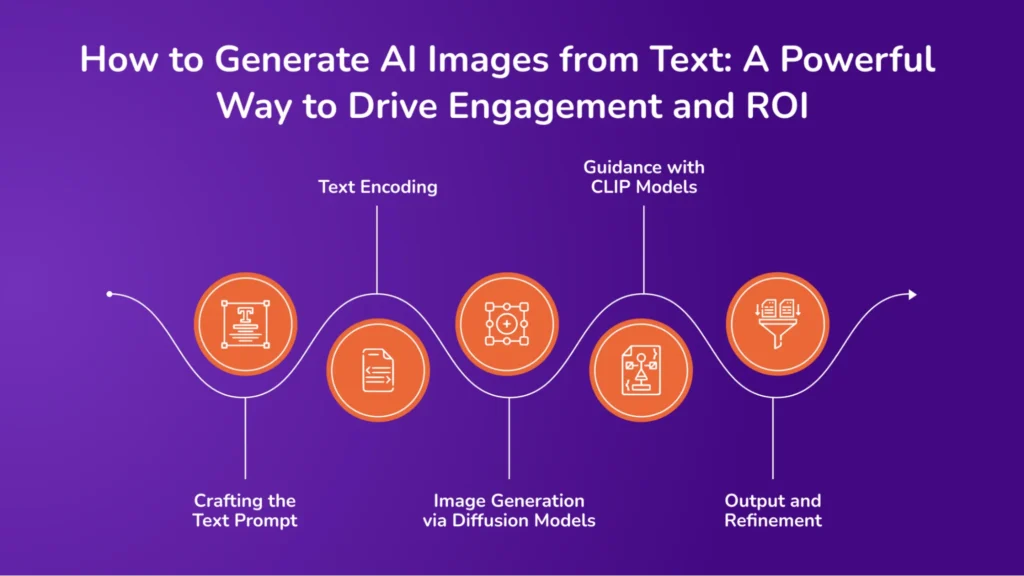

How to Generate AI Images from Text: A Powerful Way to Drive Engagement and ROI

Creating visuals no longer requires a camera or design software, just a well-written prompt. Below is a step-by-step breakdown of how to generate AI images from text using advanced models that translate language into detailed visuals.

1. Crafting the Text Prompt

Begin by formulating a clear, descriptive text prompt encapsulating the desired image. For instance, “A serene beach at sunset with palm trees and gentle waves.” The prompt’s specificity and clarity directly influence the generated image’s quality and relevance.

2. Text Encoding

The input text is processed by a language model that converts it into a numerical representation called an embedding. This embedding captures the semantic meaning of the text and guides the image generation process.

3. Image Generation via Diffusion Models

Diffusion models, such as Stable Diffusion, generate images by starting with random noise and iteratively refining it to align with the text embedding. This process involves multiple denoising steps, gradually constructing a coherent image that reflects the input prompt.

4. Guidance with CLIP Models

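Models like CLIP (Contrastive Language–Image Pre-Training) help keep the generated image faithful to the text prompt. In many systems, CLIP's text encoder supplies the embedding that conditions the diffusion model (Stable Diffusion v1, for example, uses a CLIP text encoder). CLIP can also score the similarity between the text and a generated image, and that score can be used to rank candidate outputs or to steer generation toward more semantically aligned visuals.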
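When CLIP is used to score outputs, the core operation is a cosine similarity between the prompt's embedding and each image's embedding in the shared space. The sketch below ranks several candidate images for one prompt; the three-dimensional vectors are hypothetical placeholders for real CLIP encoder outputs, which typically have hundreds of dimensions. Reranking is one simple use of the score; feeding its gradient back into the sampler (CLIP guidance) is another, not shown here.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 = same direction, 0 = unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def rank_candidates(text_embedding, image_embeddings):
    """Return candidate indices ordered from best to worst match, plus the raw scores."""
    scores = [cosine_similarity(text_embedding, img) for img in image_embeddings]
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order, scores

# Hypothetical embeddings: one prompt and three generated candidates
prompt_vec = [0.9, 0.1, 0.3]
candidate_vecs = [[0.1, 0.9, 0.2], [0.8, 0.2, 0.35], [0.4, 0.4, 0.4]]
order, scores = rank_candidates(prompt_vec, candidate_vecs)
print(order, [round(s, 3) for s in scores])
```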

5. Output and Refinement

After the iterative generation process, the final image is produced. Users can refine the image by adjusting parameters or rephrasing the prompt to achieve the desired outcome.
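Which parameters a tool exposes varies, but many diffusion-based generators let users set a random seed, a number of denoising steps, and a guidance scale. The hypothetical settings record below (field names and defaults are illustrative, not any particular product's API) shows why the seed matters for refinement: keeping it fixed reproduces the same starting noise, so a change to the prompt or guidance scale can be judged on its own.

```python
import random
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class GenerationSettings:
    prompt: str
    seed: int = 0                # fixes the starting noise, so results are reproducible
    num_steps: int = 30          # more denoising steps: slower, often finer detail
    guidance_scale: float = 7.5  # higher: typically closer prompt adherence, less variety

def initial_noise(settings, size=4):
    """The random starting point for diffusion; identical seeds give identical noise."""
    rng = random.Random(settings.seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

base = GenerationSettings(prompt="a serene beach at sunset with palm trees")
# Refine deliberately: same seed, reworded prompt, stronger guidance
tweaked = replace(base, prompt=base.prompt + ", golden hour, wide angle", guidance_scale=9.0)
print(tweaked)
```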

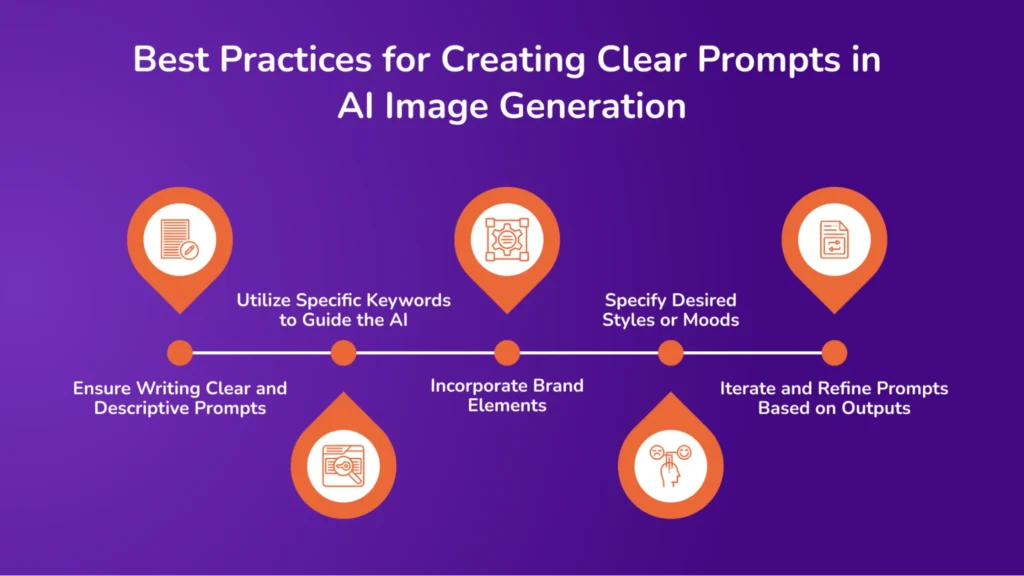

Best Practices for Creating Clear Prompts in AI Image Generation

Below are some best practices to help you write clear, effective prompts that guide AI models to generate accurate and visually relevant images.

1. Write Clear and Descriptive Prompts

Crafting precise prompts is essential for guiding AI models to generate images that align with your vision. Ambiguous or vague prompts can lead to unpredictable or irrelevant outputs. By providing detailed descriptions, you help the AI understand the desired outcome more accurately.

2. Utilize Specific Keywords to Guide the AI

Include keywords that convey the subject, style, mood, and other relevant attributes. For example, instead of saying “a bird,” specify “a vibrant red cardinal perched on a snowy branch.” Such specificity enables the AI to generate more accurate and contextually appropriate images.

3. Incorporate Brand Elements

To maintain brand consistency, include elements such as brand colors, themes, or motifs in your prompts. For instance, if your brand uses a particular color palette, mention those colors explicitly to ensure the generated images align with your branding guidelines.

4. Specify Desired Styles or Moods

Clearly state the intended style or mood to guide the AI’s creative direction. Terms like “minimalist,” “vintage,” “surreal,” or “cheerful” help the AI understand the aesthetic or emotional tone you’re aiming for, resulting in images that better match your objectives.
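The practices above can be turned into a reusable template so that every prompt covers subject, setting, style, mood, and brand elements. The helper below is a hypothetical sketch; the comma-separated phrasing is one common convention, not a format any particular model requires.

```python
def build_prompt(subject, setting=None, style=None, mood=None, brand_colors=(), extras=()):
    """Assemble a descriptive prompt from subject, setting, style, mood, and brand colors.

    Empty fields are skipped, so the same helper works for quick drafts and detailed briefs.
    """
    parts = [subject]
    if setting:
        parts.append(setting)
    if style:
        parts.append(f"{style} style")
    if mood:
        parts.append(f"{mood} mood")
    if brand_colors:
        parts.append("color palette of " + " and ".join(brand_colors))
    parts.extend(extras)
    return ", ".join(parts)

prompt = build_prompt(
    subject="a vibrant red cardinal perched on a snowy branch",
    style="minimalist",
    mood="cheerful",
    brand_colors=("navy blue", "coral"),  # hypothetical brand palette
)
print(prompt)
```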

5. Iterate and Refine Prompts Based on Outputs

AI-generated images may not always meet expectations on the first attempt. Review the outputs and adjust your prompts accordingly. This iterative process allows you to refine the prompts to achieve results that align with your vision.
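One practical way to iterate is to vary one dimension at a time and compare the results side by side. The sketch below (names are illustrative) generates every combination of a few candidate styles and moods for a single subject; each line could then be submitted to whichever image tool you use, and the strongest direction refined further.

```python
from itertools import product

def prompt_variants(subject, styles, moods):
    """Every combination of style and mood for one subject, for side-by-side review."""
    return [f"{subject}, {style} style, {mood} mood" for style, mood in product(styles, moods)]

variants = prompt_variants(
    "a product mockup of a reusable water bottle on a desk",
    styles=["minimalist", "vintage"],
    moods=["cheerful", "calm"],
)
for v in variants:
    print(v)
```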

Limitations of AI-Generated Images

While generating images from text has become more accessible with AI, certain limitations and challenges remain.

1. Potential Inaccuracies or Lack of Context

AI-generated images are produced based on patterns learned from vast datasets. However, these models may not fully grasp nuanced contexts, leading to outputs that are inaccurate or misaligned with the intended message. For instance, an AI might generate an image that misrepresents cultural symbols or fails to capture the emotional tone desired for a campaign.

2. Difficulty in Generating Complex Scenes or Specific Brand Elements

While AI can create impressive visuals, it often struggles with intricate scenes or with incorporating specific brand elements such as logos, proprietary products, or unique design aesthetics. This limitation often requires additional manual editing to ensure brand consistency and accurate representation.

3. Understanding the Rights Associated with AI-Generated Content

The legal status of AI-generated images is complex and evolving. In many jurisdictions, including the United States, works created solely by AI without significant human input are not eligible for copyright protection. This can make it difficult to assert ownership of such images or to prevent their unauthorized use.

AI models are often trained on large datasets that may include copyrighted images without explicit permission from the rights holders. This practice has led to legal disputes, as seen in cases like Getty Images suing Stability AI for allegedly using its images without authorization. Such legal challenges highlight the importance of understanding the provenance of AI-generated content and ensuring compliance with intellectual property laws.

4. Balancing AI and Human Creativity

While AI can expedite content creation, human oversight remains crucial to ensure that the outputs align with brand values and messaging. Human input is essential for interpreting context, making creative judgments, and refining AI-generated content to meet specific objectives.

5. Maintaining Authenticity and Trust

Overreliance on AI-generated images can lead to a loss of authenticity, potentially eroding consumer trust. Balancing AI-generated content with genuinely human-created visuals is essential to maintaining a brand’s credibility and emotional connection with its audience.

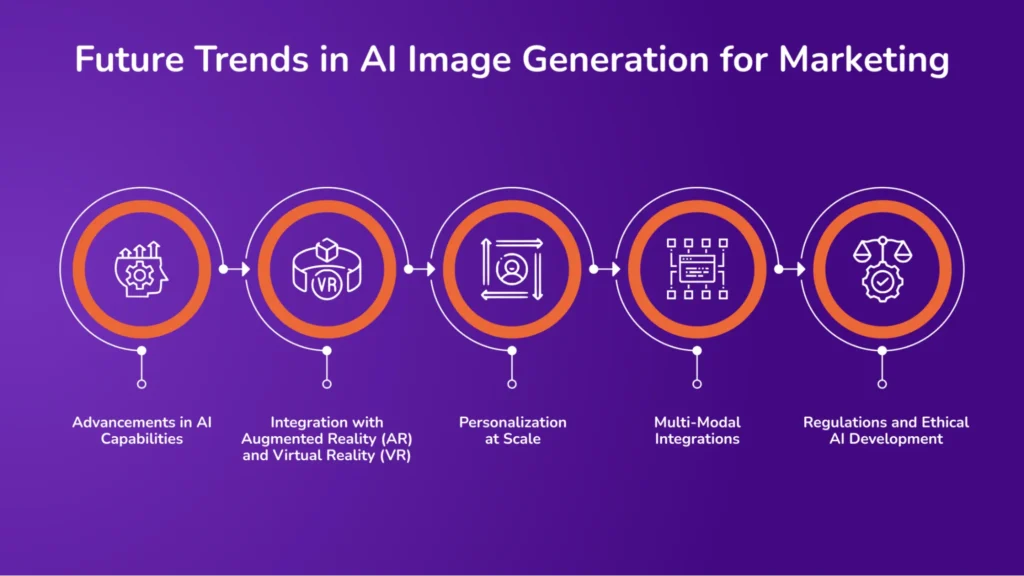

Future Trends in AI Image Generation for Marketing

As AI technology continues to evolve, it has the potential to transform how we create, interact with, and personalize visual content. Here are the areas where its impact is likely to be felt most:

1. Advancements in AI Capabilities

AI image generation is rapidly evolving, offering more realistic and customizable visuals. Modern models can produce high-fidelity images that closely mimic real-life textures and lighting. This progress allows marketers to create visuals that align more closely with brand aesthetics and campaign goals, enhancing the overall impact of marketing materials.

2. Integration with Augmented Reality (AR) and Virtual Reality (VR)

Combining AI-generated images with AR and VR technologies opens new avenues for immersive marketing experiences. For instance, AI can generate 3D models or environments that users can interact with in real-time, providing a more engaging way to showcase products or services. This integration enhances user engagement and can lead to higher conversion rates.

3. Personalization at Scale

AI enables the creation of personalized visuals tailored to individual user preferences, behaviors, or demographics. AI can generate images that resonate more effectively with specific audience segments by analyzing user data, improving relevance and engagement in marketing campaigns.

4. Multi-Modal Integrations

Future AI systems are expected to handle multiple data types simultaneously, such as text, images, audio, and video. This capability allows for creating cohesive marketing content that combines various media forms, providing a more comprehensive and engaging user experience.

5. Regulations and Ethical AI Development

As AI-generated content becomes more prevalent, there is a growing emphasis on establishing ethical guidelines and regulatory frameworks. These measures aim to address copyright, data privacy, and the potential misuse of AI-generated content, ensuring responsible development and deployment of AI technologies in marketing.

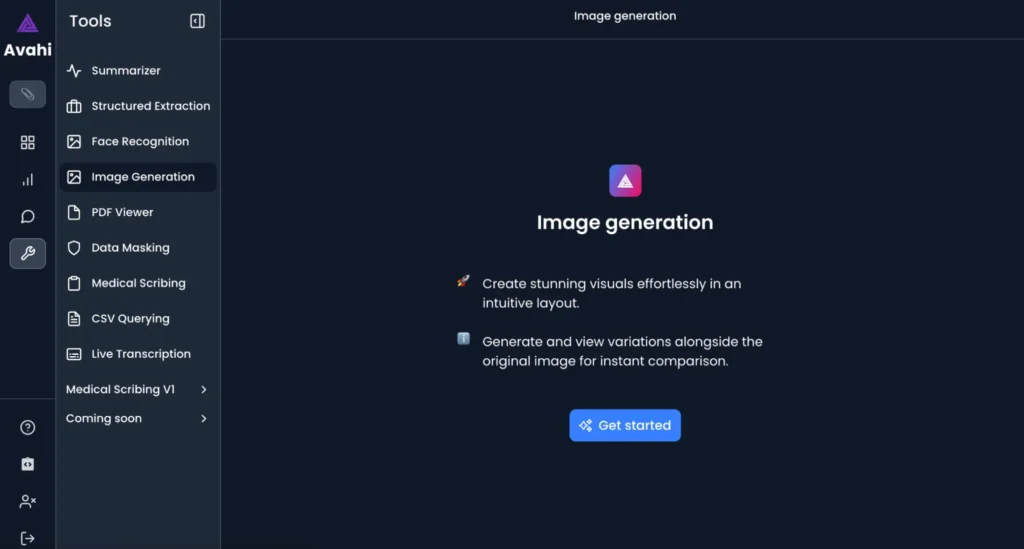

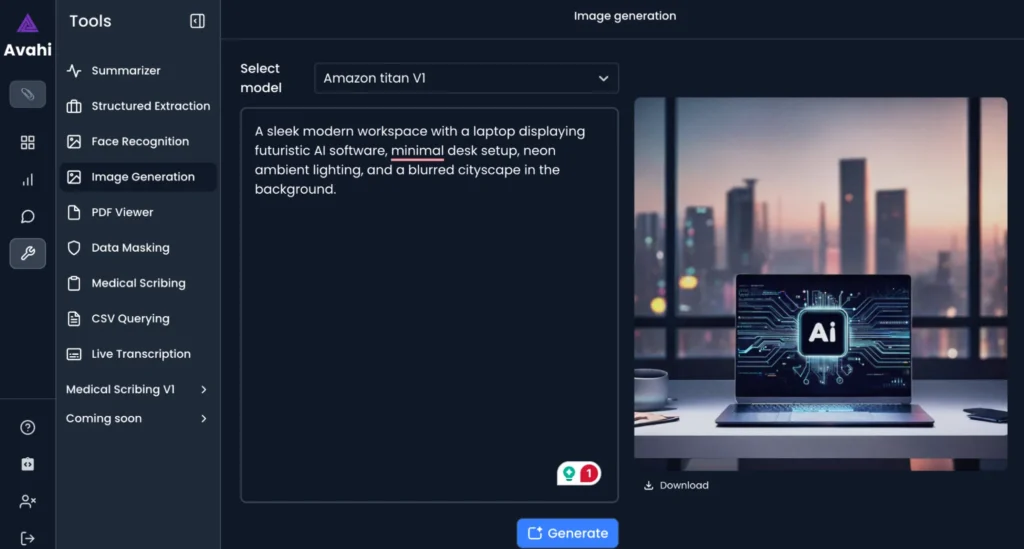

Avahi AI’s Image Generation: Turning Prompts into Visual Content with Precision

The Avahi AI platform offers a powerful image generation feature that helps users create high-quality visuals simply by describing what they want. Integrated within the platform, this capability lets marketers, designers, and businesses streamline content creation by converting plain text into detailed images using advanced AI models. Here is how it works:

1. Prompt Input

Users begin by typing a clear and descriptive text prompt into the image generation interface within the Avahi AI platform.

2. AI Processing

The system interprets the prompt using sophisticated machine learning models that understand language and translate it into visual elements.

3. Image Generation

Based on the input, the AI creates a corresponding image that reflects the content, style, and structure described in the prompt.

4. Output & Download

Once the image is generated, users can preview, refine (if needed), and download the final image for use in presentations, marketing materials, social media, or product design.

Benefits of Avahi AI’s Image Generation for Businesses and Creators

Avahi AI’s text-to-image feature offers many advantages for professionals who rely on visual content. It enables fast, cost-effective, and scalable image creation.

Faster Content Creation

Users can generate images within minutes instead of spending hours on manual design or waiting for external creative support. This is especially valuable for campaigns that need rapid turnarounds or frequent visual updates.

Creative Flexibility

Users can explore various styles and subjects, from product mockups to social media graphics and conceptual visuals. Whether you need realistic, abstract, minimal, or thematic imagery, the AI adapts to your prompt to produce the desired visual outcome.

No Design Skills Required

The image generation tool is designed to be user-friendly. Even users with no graphic design experience can create professional-quality images by describing what they need.

Consistent Branding

Users can include brand-specific elements in their prompts, such as preferred color schemes, themes, or design directions, ensuring that the generated images align with brand identity and visual standards.

Avahi AI’s image generation feature supports various industries, helping marketing teams create ad creatives and blog visuals, e-commerce brands design product concepts, educators develop explainer content, and startups rapidly prototype ideas. By taking users from idea to high-quality image within seconds, it brings speed, creativity, and efficiency to visual content development.

Discover Avahi’s AI Platform in Action

At Avahi, we empower businesses to deploy advanced Generative AI that streamlines operations, enhances decision-making, and accelerates innovation—all with zero complexity.

As your trusted AWS Cloud Consulting Partner, we help organizations harness AI’s full potential while ensuring security, scalability, and compliance with industry-leading cloud solutions.

Our AI Solutions include:

- AI Adoption & Integration – Utilize Amazon Bedrock and GenAI to enhance automation and decision-making.

- Custom AI Development – Build intelligent applications tailored to your business needs.

- AI Model Optimization – Seamlessly switch between AI models with automated cost, accuracy, and performance comparisons.

- AI Automation – Automate repetitive tasks and free up time for strategic growth.

- Advanced Security & AI Governance – Ensure compliance, fraud detection, and secure model deployment.

Want to unlock the power of AI with enterprise-grade security and efficiency? Get Started with Avahi’s AI Platform!

Frequently Asked Questions (FAQs)

1. What is text-to-image AI, and how does it work?

Text-to-image AI is a technology that generates images based on written descriptions. It uses machine learning models like diffusion, GANs, or transformers to interpret the text and create visuals that match the input prompt. Simply put, you describe an image in words, and the AI turns it into a picture.

2. How can I write effective prompts for AI image generation?

Start with a clear, specific description of what you want. Mention elements like subject, color, style, setting, or emotion. For example, instead of “a cat,” say “a fluffy white cat sleeping on a sunny windowsill in a cozy living room.” The more detailed the prompt, the better the results.

3. Can I use AI-generated images for commercial purposes?

It depends on the tool and the licensing terms. Some platforms allow full commercial use, while others require attribution or restrict specific uses. Always review the licensing agreement before using AI-generated images in ads, branding, or resale products.

4. Are there limitations to using AI-generated images?

Yes. AI might misinterpret vague prompts, struggle with complex scenes, or fail to include exact brand elements like logos. It may also generate content that lacks emotional or cultural context. Human review and editing are often needed to refine results.

5. Is AI-generated image creation ethical and safe?

Ethical use depends on transparency, originality, and proper data sourcing. Some models are trained on copyrighted data, which raises concerns. Marketers should choose reputable tools, avoid using AI to mimic real people or mislead consumers, and always credit sources where required.