Imagine scrolling through your favorite social media app. You upload a group photo, and names appear over the faces within seconds. This is powered by AI facial recognition, and it’s not just identifying your friends; it’s learning more about your face than you realize.

Facial recognition technology is no longer science fiction. It’s embedded in everyday experiences, from unlocking your phone to automatically tagging photos, to personalized filters that track every micro-expression.

The global facial recognition market is expected to reach $24.28 billion by 2032, growing at a compound annual growth rate (CAGR) of 15.5% from 2025, according to Fortune Business Insights.

But with great convenience comes deeper concerns.

In 2025, Google agreed to pay $1.375 billion to the state of Texas over claims that it had collected biometric data, such as facial geometry, without users’ consent.

Incidents like this are signs of a larger pattern in which your face, a uniquely personal identifier, is being captured, stored, and sometimes exploited in ways you may never have agreed to.

At the same time, facial recognition is becoming more intelligent and pervasive. Powered by AI models, it can identify people even in poor lighting, with partial visibility, or across time as faces age. So, is your face private anymore?

This blog provides a clear breakdown of how AI face recognition technology works, explores the widespread use of facial recognition across social media platforms, and offers practical insights on how individuals can protect their digital identity in an increasingly AI-driven world.

What is AI Face Recognition & How Does It Work

AI face recognition technology uses artificial intelligence to identify or verify individuals by analyzing their facial features.

It captures a person’s face from a photo or video, processes it with machine learning algorithms, and compares it to a stored image or database. Due to its speed, accuracy, and contactless nature, this technology is widely used in security, authentication, and social media platforms.

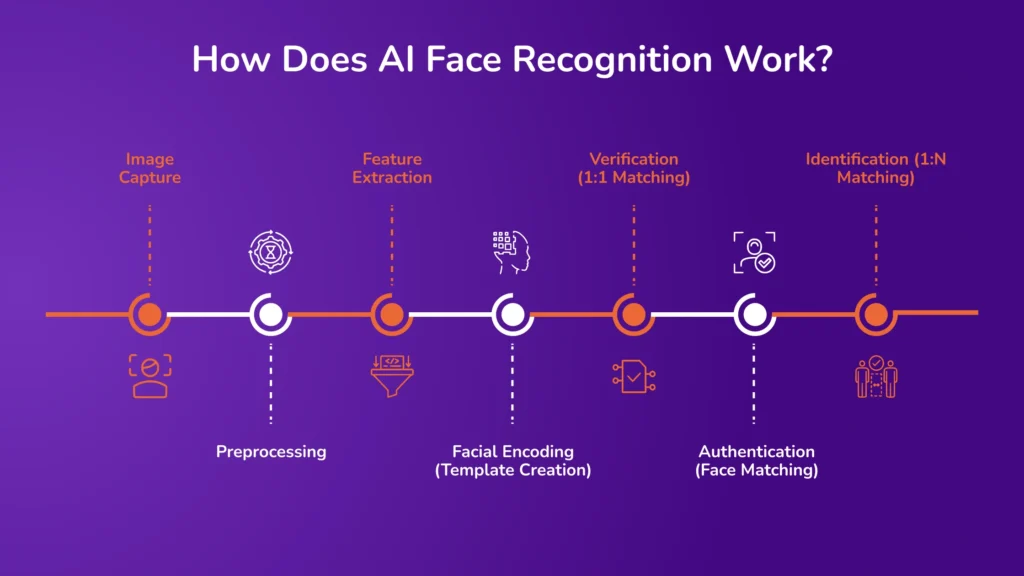

How Does AI Face Recognition Work?

AI face recognition is a multi-step process using machine learning and computer vision to accurately identify or verify a person based on facial features. Here’s an explanation of how the technology works:

1. Image Capture

The process begins with capturing an image or video that contains one or more human faces. This is usually done using cameras on smartphones, laptops, or surveillance systems.

The system has to handle faces captured under varying conditions, including different angles, lighting, backgrounds, and distances. In more advanced use cases, such as group photos or crowded public spaces, it can detect multiple faces within a single frame.

2. Preprocessing

Once a face is captured, the image undergoes preprocessing to optimize it for analysis. This step includes adjusting brightness and contrast to improve visibility, reducing noise and enhancing sharpness for more precise facial details, and detecting the face within the frame using algorithms to isolate it from the background and other objects. Preprocessing ensures the system works effectively even with imperfect image quality.
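
As a rough illustration of the brightness and contrast step, the sketch below stretches the pixel range of a small grayscale image and then crops a face region. It assumes the image is a plain list of rows of 0 to 255 values and that a detector has already supplied the face box; the function names are hypothetical, and production systems typically rely on an image library rather than hand-written loops.

```python
def stretch_contrast(gray):
    """Linearly rescale pixels so the darkest becomes 0 and the brightest 255."""
    flat = [p for row in gray for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # perfectly flat image: nothing to stretch
        return [row[:] for row in gray]
    # Integer arithmetic keeps the result deterministic.
    return [[(p - lo) * 255 // (hi - lo) for p in row] for row in gray]


def crop(gray, top, left, height, width):
    """Cut out a rectangular region, e.g. a face box from a detector."""
    return [row[left:left + width] for row in gray[top:top + height]]


# A dim 3x3 image whose values only span 50..150.
dim = [
    [50, 60, 70],
    [60, 80, 100],
    [70, 100, 150],
]
enhanced = stretch_contrast(dim)
face_region = crop(enhanced, top=1, left=1, height=2, width=2)
print(enhanced)
print(face_region)
```

After stretching, the image uses the full 0 to 255 range, which gives later steps more contrast to work with.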

3. Feature Extraction

Next, the system identifies and analyzes key facial features. These include the shape and position of the eyes, eyebrows, nose, lips, cheekbones, and jawline, as well as the spatial relationships between these points, such as the distance between the eyes or the angle of the jaw.

AI algorithms powered by deep learning analyze these patterns to create a detailed map of the face. Over time, these models learn to detect subtle differences across individuals, improving accuracy even when faces age or undergo changes.
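
To make the idea of spatial relationships concrete, here is a simplified sketch that measures distances between a few landmark points and divides them by the distance between the eyes, so the result does not depend on image size. The landmark names and coordinates are made up for illustration, and modern deep learning systems learn their features from data instead of measuring them by hand like this.

```python
import math


def landmark_features(landmarks):
    """Pairwise landmark distances, scaled by the distance between the eyes."""
    eye_dist = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    names = sorted(landmarks)
    features = {}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            features[(a, b)] = math.dist(landmarks[a], landmarks[b]) / eye_dist
    return features


# Hypothetical (x, y) pixel coordinates for one face.
face = {
    "left_eye": (30.0, 40.0),
    "right_eye": (70.0, 40.0),
    "nose_tip": (50.0, 60.0),
    "mouth_center": (50.0, 80.0),
}
# The same face photographed at twice the resolution.
face_2x = {name: (x * 2, y * 2) for name, (x, y) in face.items()}

features = landmark_features(face)
features_2x = landmark_features(face_2x)
```

Because every distance is expressed relative to the eye spacing, `features` and `features_2x` come out the same even though the second image is twice as large.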

4. Facial Encoding (Template Creation)

Once the facial features are extracted, the system converts this information into a faceprint, a unique mathematical representation of the face.

This encoding is a set of numerical vectors that summarize the facial structure. It acts like a digital fingerprint, allowing the system to compare and store identities efficiently. This encoded template is stored securely and used in future comparisons.
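
A minimal sketch of what such a template might look like in code: a feature vector scaled to unit length, so two templates can be compared by the distance between them. The function names and the tiny two-number vectors are assumptions for illustration; real faceprints usually have hundreds of dimensions and come from a trained model.

```python
import math


def make_template(features):
    """Scale a feature vector to unit length so templates are comparable."""
    norm = math.sqrt(sum(x * x for x in features))
    if norm == 0:
        raise ValueError("feature vector must not be all zeros")
    return [x / norm for x in features]


def template_distance(a, b):
    """Euclidean distance between two templates (0.0 means identical)."""
    return math.dist(a, b)


template = make_template([3.0, 4.0])
same_face_brighter = make_template([6.0, 8.0])  # same direction, larger scale
print(template, template_distance(template, same_face_brighter))
```

Normalizing removes the overall scale of the vector, so only the pattern of the features affects the comparison.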

5. Verification (1:1 Matching)

Verification is the first step when a person registers with a facial recognition system. A live image is captured and compared with a specific reference image, such as a photo on a government-issued ID.

This 1:1 comparison confirms that the person is who they claim to be. Once verified, the person’s faceprint is stored in the system for future authentication.

6. Authentication (Face Matching)

After the initial verification, the system uses authentication to verify the person’s identity in future interactions.

A live face image is captured and compared to the previously stored faceprint. If the match is successful, access is granted (e.g., unlocking a phone, logging into an app, or entering a secure facility). This enables fast and secure access control without passwords or cards.

7. Identification (1:N Matching)

In identification mode, the system matches a face against an extensive database of stored faceprints. This is a 1:N comparison, where the system determines who the person is among many registered individuals. Everyday use cases include surveillance, law enforcement investigations, and airport security.

Unlike verification, which confirms a claimed identity, identification seeks to discover an identity from a pool of possibilities.
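
The difference between 1:1 and 1:N matching can be sketched in a few lines. The example below assumes faceprints are short lists of numbers compared by cosine similarity, and the 0.9 threshold, the names, and the vectors are all illustrative values, not figures from any real system.

```python
import math


def similarity(a, b):
    """Cosine similarity between two faceprint vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def verify(probe, enrolled, threshold=0.9):
    """1:1 matching: does the probe match one claimed identity?"""
    return similarity(probe, enrolled) >= threshold


def identify(probe, gallery, threshold=0.9):
    """1:N matching: return the best match in the gallery, or None."""
    best_name, best_score = None, threshold
    for name, template in gallery.items():
        score = similarity(probe, template)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical enrolled faceprints.
gallery = {
    "alice": [0.9, 0.1, 0.4],
    "bob": [0.1, 0.8, 0.6],
}
probe = [0.88, 0.12, 0.42]   # a new capture that resembles alice
stranger = [0.0, 0.0, 1.0]   # not close to anyone enrolled

print(verify(probe, gallery["alice"]))
print(identify(probe, gallery))
print(identify(stranger, gallery))
```

Verification compares against a single template, so its cost stays constant, while identification scans the whole gallery and has to decide what to do when nobody scores above the threshold.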

The models behind these steps continue to evolve with larger datasets and improved learning techniques, driving the growing use of facial recognition in both commercial and governmental applications.

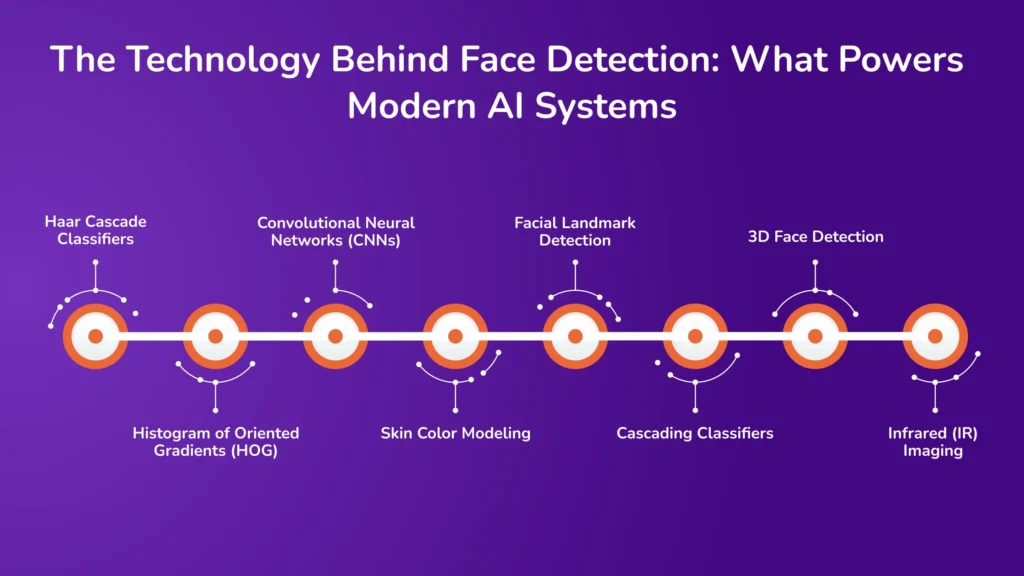

The Technology Behind Face Detection: What Powers Modern AI Systems

Face detection is a fundamental task in computer vision that involves identifying and locating human faces within digital images or video frames. Below is an overview of the primary technologies employed in face detection:

1. Haar Cascade Classifiers

Introduced by Viola and Jones in 2001, Haar Cascade Classifiers are among the earliest and most influential methods for real-time face detection. They utilize Haar-like features, simple rectangular patterns that capture the contrast between adjacent regions of an image, such as the area around the eyes and cheeks.

These features are organized into a cascade of classifiers, allowing the system to quickly discard non-face regions and focus computational resources on promising areas. This efficient method was widely adopted in early face detection applications, including those implemented in OpenCV.
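
One reason Haar-like features are fast is the integral image, which lets the sum of any rectangle be read with four lookups. The sketch below computes an integral image and a two-rectangle feature on a tiny synthetic patch; the patch values and function names are made up for illustration, and this is not OpenCV's implementation.

```python
def integral_image(gray):
    """ii[y][x] holds the sum of all pixels above and to the left of (y, x)."""
    h, w = len(gray), len(gray[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += gray[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of a rectangle using four lookups into the integral image."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_vertical(ii, top, left, height, width):
    """Lower half minus upper half: large when a dark band sits above a bright one."""
    half = height // 2
    upper = rect_sum(ii, top, left, half, width)
    lower = rect_sum(ii, top + half, left, half, width)
    return lower - upper


# Synthetic 4x4 patch: a dark band (eyes) above a bright band (cheeks).
patch = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
ii = integral_image(patch)
print(rect_sum(ii, 0, 0, 4, 4), two_rect_vertical(ii, 0, 0, 4, 4))
```

A strong positive response here is the kind of contrast pattern the detector looks for around the eyes and cheeks.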

2. Histogram of Oriented Gradients (HOG)

HOG is a feature descriptor that captures the distribution of gradient orientations in localized portions of an image. By analyzing the direction and intensity of edges, HOG effectively represents the structural characteristics of objects, including human faces.

When combined with classifiers like support vector machines (SVM), HOG can accurately detect faces. This is beneficial in scenarios where computational efficiency is essential.
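
The core of HOG is a histogram of edge directions for each small cell of the image. The following sketch builds one such histogram with nine bins over 0 to 180 degrees; it is deliberately simplified (no interpolation between bins and no block normalization, both of which fuller HOG implementations use), and the test images are synthetic.

```python
import math


def cell_histogram(gray, bins=9):
    """Histogram of gradient orientations (0-180 degrees), weighted by magnitude."""
    h, w = len(gray), len(gray[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    # Skip the border so every pixel has neighbours on both sides.
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gray[y][x + 1] - gray[y][x - 1]
            gy = gray[y + 1][x] - gray[y - 1][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    return hist


# Brightness changes left to right: gradients point horizontally.
vertical_edge = [[0, 0, 255, 255] for _ in range(4)]
# Brightness changes top to bottom: gradients point vertically.
horizontal_edge = [[0] * 4, [0] * 4, [255] * 4, [255] * 4]

hist_v = cell_histogram(vertical_edge)
hist_h = cell_histogram(horizontal_edge)
print(hist_v)
print(hist_h)
```

The two patches put all their weight in different bins, which is the structural signal a classifier such as an SVM can then learn from.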

3. Convolutional Neural Networks (CNNs)

CNNs have revolutionized face detection by enabling systems to learn hierarchical representations of facial features directly from data. General-purpose object detection architectures such as Faster R-CNN, YOLO (You Only Look Once), and SSD (Single Shot MultiBox Detector) have been adapted for face detection tasks.

These deep learning models can handle pose, lighting, and occlusion variations, achieving high accuracy in complex environments.
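
The basic building block of a CNN is a small filter slid across the image, followed by a nonlinearity. The sketch below applies one hand-written edge filter and a ReLU to a tiny image. In a trained network the filter weights are learned rather than fixed, and there are many filters stacked in layers, so treat this only as an illustration of the operation itself.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[y + i][x + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses and zero out negative ones."""
    return [[max(0, v) for v in row] for row in feature_map]


# A fixed filter that responds to dark-to-bright transitions from left to right.
edge_kernel = [[-1, 0, 1]] * 3

dark_to_bright = [[0, 0, 9, 9]] * 3
bright_to_dark = [[9, 9, 0, 0]] * 3

print(relu(conv2d_valid(dark_to_bright, edge_kernel)))
print(relu(conv2d_valid(bright_to_dark, edge_kernel)))
```

The filter fires on one edge direction and stays silent on the opposite one, which is how early layers pick out simple patterns that deeper layers combine into facial structure.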

4. Skin Color Modeling

Skin color modeling involves detecting regions in an image that match the color characteristics of human skin.

Color spaces such as HSV (Hue, Saturation, Value), YCbCr (Luminance, Chrominance), and TSL (Tint, Saturation, Lightness) are often used to enhance the robustness of skin detection under varying lighting conditions. By identifying skin-colored regions, the system can narrow down potential face locations, improving the efficiency of subsequent detection steps.
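
As a sketch of how this works in the YCbCr color space, the code below converts RGB pixels using the standard BT.601 full-range formulas and keeps those whose chrominance falls inside a fixed box. The Cb and Cr ranges used here are a commonly cited rule of thumb and should be treated as an assumption; fixed thresholds like these do not hold up equally well across all skin tones, cameras, and lighting.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert 8-bit RGB to full-range YCbCr (BT.601 coefficients, as in JPEG)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def is_skin_pixel(r, g, b, cb_range=(77, 127), cr_range=(133, 173)):
    """Threshold on chrominance only, ignoring brightness (Y)."""
    _, cb, cr = rgb_to_ycbcr(r, g, b)
    return cb_range[0] <= cb <= cb_range[1] and cr_range[0] <= cr <= cr_range[1]


def skin_mask(image):
    """Boolean mask marking candidate skin pixels in an image of (r, g, b) tuples."""
    return [[is_skin_pixel(*pixel) for pixel in row] for row in image]


image = [[(224, 172, 105), (0, 0, 255)],
         [(0, 255, 0), (255, 255, 255)]]
print(skin_mask(image))
```

Ignoring the luminance channel is what makes the check less sensitive to how bright the scene is.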

5. Facial Landmark Detection

Facial landmark detection identifies key points on the face, such as the corners of the eyes, the tip of the nose, and the edges of the mouth. These landmarks are crucial for face alignment, expression analysis, and pose estimation.

Techniques for landmark detection often leverage machine learning models trained on annotated facial datasets, enabling precise localization of facial features even under challenging conditions.
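
Face alignment is one place where landmarks are used directly. The sketch below estimates how far a face is tilted from the line between the two eye landmarks and rotates points to level it. The coordinates are invented, and the landmark positions themselves are assumed to come from a separate detector.

```python
import math


def roll_angle_degrees(left_eye, right_eye):
    """Tilt of the line through the eyes, in degrees (0 means level)."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def rotate_point(point, center, degrees):
    """Rotate a point around a center by the given angle."""
    theta = math.radians(degrees)
    x, y = point[0] - center[0], point[1] - center[1]
    return (
        center[0] + x * math.cos(theta) - y * math.sin(theta),
        center[1] + x * math.sin(theta) + y * math.cos(theta),
    )


# Hypothetical eye landmarks from a tilted face.
left_eye, right_eye = (0.0, 0.0), (10.0, 10.0)
angle = roll_angle_degrees(left_eye, right_eye)
midpoint = ((left_eye[0] + right_eye[0]) / 2, (left_eye[1] + right_eye[1]) / 2)

# Undo the tilt by rotating every landmark by the opposite angle.
aligned_left = rotate_point(left_eye, midpoint, -angle)
aligned_right = rotate_point(right_eye, midpoint, -angle)
print(angle, aligned_left, aligned_right)
```

After the rotation both eyes sit at the same height, which gives later stages a consistent pose to work with.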

6. Cascading Classifiers

Cascading classifiers are a series of progressively more complex classifiers applied sequentially to an image region. Early stages quickly eliminate non-face regions using simple features, while later stages apply more detailed analysis to confirm the presence of a face.

This hierarchical strategy balances detection accuracy with computational efficiency, making it suitable for real-time applications.
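
The early-rejection idea can be sketched as a list of checks run in order, stopping at the first failure. The three stage functions below are hypothetical stand-ins with made-up thresholds; in a real cascade each stage is a trained classifier.

```python
def mean(values):
    return sum(values) / len(values)


def plausible_brightness(window):
    """Stage 1 (cheap): reject windows that are almost black or blown out."""
    return 30 <= mean([p for row in window for p in row]) <= 220


def enough_contrast(window):
    """Stage 2: reject flat regions such as walls or sky."""
    flat = [p for row in window for p in row]
    return max(flat) - min(flat) >= 40


def upper_darker_than_lower(window):
    """Stage 3: eye region (top half) tends to be darker than the cheeks."""
    half = len(window) // 2
    upper = [p for row in window[:half] for p in row]
    lower = [p for row in window[half:] for p in row]
    return mean(upper) < mean(lower)


def run_cascade(window, stages):
    """Return (accepted, number_of_stages_evaluated)."""
    for index, stage in enumerate(stages, start=1):
        if not stage(window):
            return False, index  # rejected early: later stages never run
    return True, len(stages)


stages = [plausible_brightness, enough_contrast, upper_darker_than_lower]
print(run_cascade([[0, 0], [0, 0]], stages))
print(run_cascade([[100, 100], [100, 100]], stages))
print(run_cascade([[40, 40], [180, 180]], stages))
```

Most windows in an image contain no face, so rejecting them after one or two cheap checks is where the speed comes from.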

7. 3D Face Detection

3D face detection incorporates depth information to model the three-dimensional structure of the face. This method enhances robustness to variations in pose and lighting, as it captures geometric details that 2D methods might miss.

Technologies like structured light and time-of-flight sensors are employed to acquire depth data, enabling more accurate and reliable face detection in diverse scenarios.

8. Infrared (IR) Imaging

Infrared imaging captures thermal or near-infrared light emitted or reflected by objects, providing an alternative modality for face detection.

IR-based systems are less sensitive to ambient lighting conditions, making them effective in low-light or nighttime environments. Additionally, IR imaging can aid in detecting liveness, as it captures heat patterns associated with living tissue, helping to prevent spoofing attacks using photographs or masks.

How Facial Recognition Works on Social Media Platforms

Facial recognition technology on social media relies on user interactions, artificial intelligence algorithms, and supplementary data to identify individuals in photos and videos. Below is a breakdown of how this process works:

1. Data Collection

Social media platforms build facial data by collecting images and videos that users upload. The system scans the image for faces every time someone uploads a photo. Users often tag themselves or others, which further helps the platform associate names with facial features.

Over time, this manual tagging allows the platform to improve its automatic recognition capabilities. Platforms also use auto-suggestions to prompt users to tag others based on similarities with previously tagged images, making the tagging process faster and more automated.

2. Feature Analysis

AI algorithms extract key facial features once a face is detected in an image. This includes analyzing the distances between the eyes, nose, mouth, jawline, and other identifiable points. These measurements are converted into a unique numerical representation called a “faceprint.”

Think of it as a digital fingerprint for your face. The system stores these faceprints and compares them with existing data whenever a new image is uploaded. The system suggests or automatically applies a tag if the features closely match a previously identified faceprint.

For example, Facebook (now Meta) developed DeepFace, a deep learning model that identifies faces with high accuracy. It scanned images to detect and recognize people for tagging suggestions. Although Facebook shut down this feature in 2021 amid privacy concerns, the underlying technology set a precedent for others.

3. Metadata Usage

Platforms collect metadata from uploaded media in addition to facial features to enhance recognition accuracy. Metadata includes geolocation (where the photo was taken), timestamps (when it was taken), device identifiers (what phone or camera was used), and even image orientation or light settings.

This metadata helps AI systems make more informed guesses when combined with facial data. For example, suppose a person is frequently tagged in photos taken at a specific location or with a particular group. In that case, the system can use that context to improve recognition predictions.
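
One hypothetical way to picture this is a small re-ranking step, where a face similarity score is nudged by how often each candidate has been tagged at the photo's location. The weighting scheme, names, and numbers below are purely illustrative assumptions and do not describe how any particular platform works.

```python
def rerank_with_context(face_scores, location_history, location, weight=0.1):
    """Pick the best candidate after adding a small bonus for familiar locations."""
    adjusted = {}
    for name, score in face_scores.items():
        history = location_history.get(name, {})
        total = sum(history.values())
        # Fraction of this person's past tags that were at the same location.
        prior = history.get(location, 0) / total if total else 0.0
        adjusted[name] = score + weight * prior
    return max(adjusted, key=adjusted.get)


# Hypothetical face similarity scores for one detected face.
face_scores = {"alice": 0.80, "bob": 0.82}
# Hypothetical counts of past tags per location.
location_history = {
    "alice": {"cafe": 9, "gym": 1},
    "bob": {"office": 10},
}

print(rerank_with_context(face_scores, location_history, "cafe"))
print(rerank_with_context(face_scores, location_history, "park"))
```

With no useful context the face score alone decides, but a familiar location can tip a close call, which is also why stripping metadata reduces what a system can infer.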

Risks and Ethical Challenges of Facial Recognition Technology

Despite its rapid adoption and technological advancements, facial recognition still carries significant risks and ethical challenges that cannot be overlooked. Here are some of the most important ones:

1. Data Collection Without Consent

Facial recognition systems often collect images without individuals’ explicit consent. For instance, Clearview AI amassed a database of over 30 billion facial images by scraping photos from social media and other online platforms without user permission. This practice led to significant fines, including a penalty of €30.5 million (roughly $33 million) from the Dutch Data Protection Authority for violating privacy laws.

2. Potential for Misuse

The unauthorized use of facial data poses risks such as surveillance, stalking, and identity theft. In the UK, reports have highlighted that police forces unlawfully retained custody images of individuals who were never charged, using them for facial recognition searches. Such practices raise concerns about the potential misuse of biometric data by authorities and other entities.

3. Bias and Discrimination

Studies have shown that facial recognition systems can exhibit biases against certain racial and gender groups. Research from the MIT Media Lab found that facial analysis software had error rates of up to 35% for darker-skinned women, compared to less than 1% for lighter-skinned men. These disparities can lead to misidentification and unequal treatment in various applications, including law enforcement and employment.
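
Disparities like these are found by breaking error rates down per group instead of reporting a single overall accuracy figure. The sketch below shows that calculation on a handful of synthetic records; the group labels and outcomes are invented for illustration and are not data from any study.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """records: iterable of (group, predicted_label, true_label) tuples."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Synthetic evaluation results, for illustration only.
records = [
    ("group_a", "match", "match"),
    ("group_a", "match", "match"),
    ("group_a", "match", "match"),
    ("group_a", "no_match", "match"),
    ("group_b", "match", "match"),
    ("group_b", "match", "match"),
    ("group_b", "no_match", "no_match"),
    ("group_b", "match", "match"),
]
rates = error_rate_by_group(records)
print(rates)
```

In this toy data the overall error rate is 12.5%, which hides the fact that every mistake falls on one group.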

4. Lack of Regulation

The rapid advancement of facial recognition technology has outpaced existing privacy laws and regulations. In many jurisdictions, there is a lack of comprehensive legal frameworks to govern the collection, use, and storage of biometric data.

For example, a report by the National Academies highlighted the need for updated laws to address privacy, equity, and civil liberties concerns associated with facial recognition technology.

Strategies for Preserving Your Facial Privacy on Social Platforms

While complete anonymity on social media is difficult, users can take specific steps to reduce how often and how accurately their faces are recognized. Here are some best practices you can follow to protect your privacy:

1. Adjust Privacy Settings to Control Tagging and Recognition

Most major social platforms allow users to manage who can tag them and whether facial recognition suggestions are used. Disabling auto-tagging features prevents the platform from automatically identifying and suggesting your name in photos.

Additionally, users can limit who can tag them in images or review tags before they appear publicly, which reduces unwanted visibility.

2. Enhance Privacy with Browser and Mobile Tools

Browser extensions and mobile tools designed to protect facial privacy exist. Face blur tools can automatically obscure faces in uploaded photos, helping maintain anonymity.

Metadata scrubbers remove embedded data, such as GPS coordinates and device details, from image files before posting, limiting the contextual clues AI can use during analysis.
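
To show roughly what a metadata scrubber does, the sketch below walks the header segments of a JPEG file and drops the APP1 segments, which is where Exif data (including GPS coordinates) and XMP are normally stored. It is a simplified illustration that assumes a well-formed file; the function name is hypothetical, and a maintained tool or library is a safer choice for real photos.

```python
import struct


def strip_app1_segments(jpeg_bytes):
    """Return a copy of a JPEG with APP1 (Exif/XMP) segments removed."""
    if jpeg_bytes[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing start-of-image marker")
    out = bytearray(b"\xff\xd8")
    pos = 2
    while pos + 4 <= len(jpeg_bytes):
        if jpeg_bytes[pos] != 0xFF:
            raise ValueError("malformed JPEG: expected a segment marker")
        marker = jpeg_bytes[pos + 1]
        if marker == 0xDA:
            # Start of scan: everything from here on is image data.
            out += jpeg_bytes[pos:]
            return bytes(out)
        # Segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", jpeg_bytes[pos + 2:pos + 4])
        if marker != 0xE1:  # keep everything except APP1
            out += jpeg_bytes[pos:pos + 2 + length]
        pos += 2 + length
    out += jpeg_bytes[pos:]
    return bytes(out)


# A tiny fake JPEG: SOI, APP0, APP1 with an Exif header, SOS, data, EOI.
fake_jpeg = (
    b"\xff\xd8"
    + b"\xff\xe0\x00\x04JF"
    + b"\xff\xe1\x00\x08Exif\x00\x00"
    + b"\xff\xda\x00\x02"
    + b"\x12\x34"
    + b"\xff\xd9"
)
cleaned = strip_app1_segments(fake_jpeg)
print(len(fake_jpeg), len(cleaned))
```

The image data itself is copied through untouched, so the photo looks the same while the embedded location and device details are gone.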

3. Minimize Digital Footprint

One effective preventive measure is to avoid uploading images clearly showing your face, especially in high-resolution or frontal views.

Educating friends and family about tagging policies and responsible posting helps reduce your exposure in group photos or shared content where you may be present but did not post the image yourself.

Future of Facial Recognition on Social Media

Facial recognition on social media is evolving rapidly. This progress creates opportunities and raises concerns, making responsible development and transparency essential. Here’s how it is set to transform social media platforms:

1. Integration with AR/VR Platforms

Facial recognition is expected to play a central role in the growing use of Augmented Reality (AR) and Virtual Reality (VR) within social ecosystems.

Platforms like Meta’s Horizon Worlds and Snapchat’s AR filters already rely on facial tracking to enable lifelike avatars, facial expressions, and interactive content. Future applications could include real-time facial emotion detection in virtual meetings or customizing digital experiences based on user reactions.

2. Real-Time Tracking and Behavior Prediction

AI models can identify faces, track them in real time across video feeds, and predict behavior. For instance, TikTok’s algorithm interprets facial engagement, such as whether users smile or linger longer on specific content, to optimize feed recommendations.

In the near future, social platforms could analyze micro-expressions, gaze patterns, or facial tension to anticipate user intent or mood, which may influence content delivery or ad targeting.

3. Voice Recognition

Voice biometrics are increasingly being used as a supplementary or alternative identification method. Platforms that integrate audio content, such as Clubhouse or Instagram Voice Notes, may use voice recognition to verify users or personalize experiences.

Voice data offers a contactless form of identification but comes with its own set of privacy implications.

4. Gait Analysis

Gait recognition technology analyzes how a person walks to identify them. While this is more common in surveillance and security settings, it may become popular on social media for avatar personalization or movement-based interaction in VR environments.

For example, companies developing full-body motion tracking for platforms like Meta Quest are exploring gait-based identity markers for realistic avatar representation.

Avahi AI’s Facial Recognition: Streamlining Identity Verification

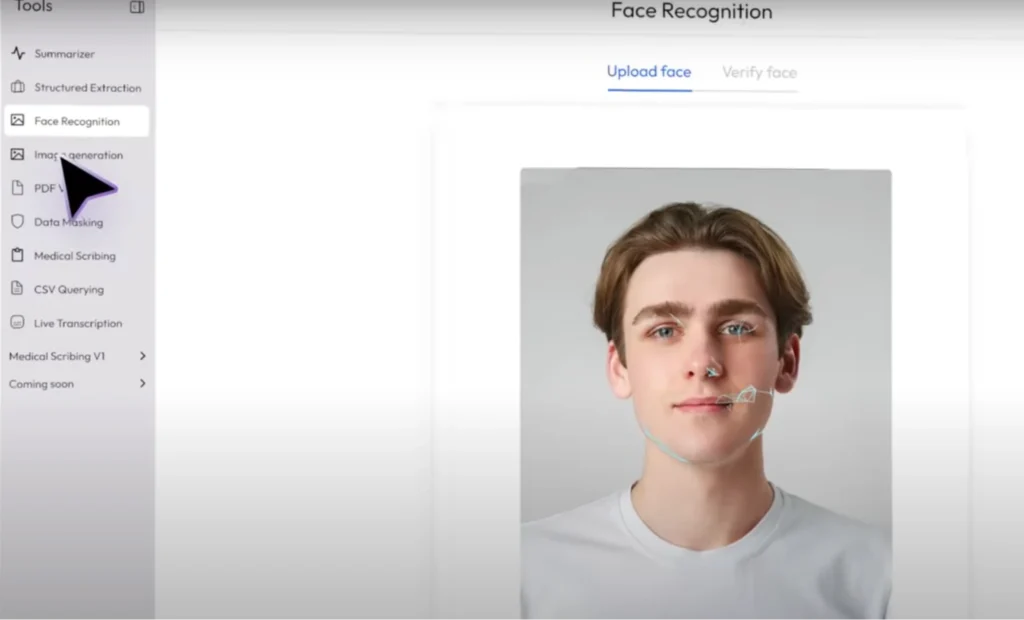

The Avahi AI platform offers a facial recognition feature to enhance identity verification processes. By integrating advanced biometric authentication into social media ecosystems, platforms can offer users a safer, more streamlined, and trustworthy experience.

How It Works

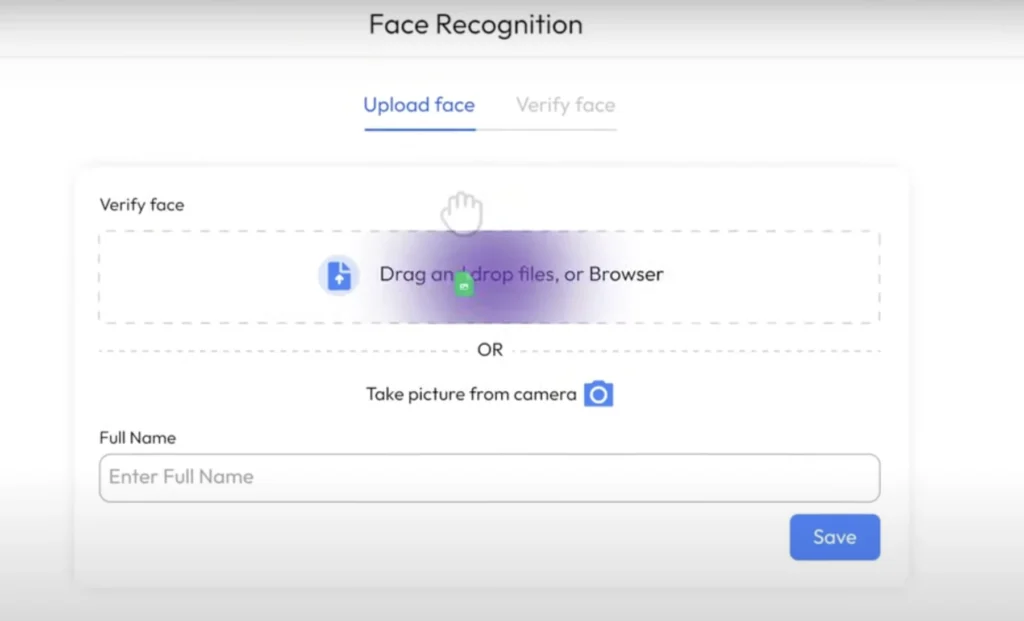

1. Image Upload: Users upload a clear image of their face through the platform’s interface.

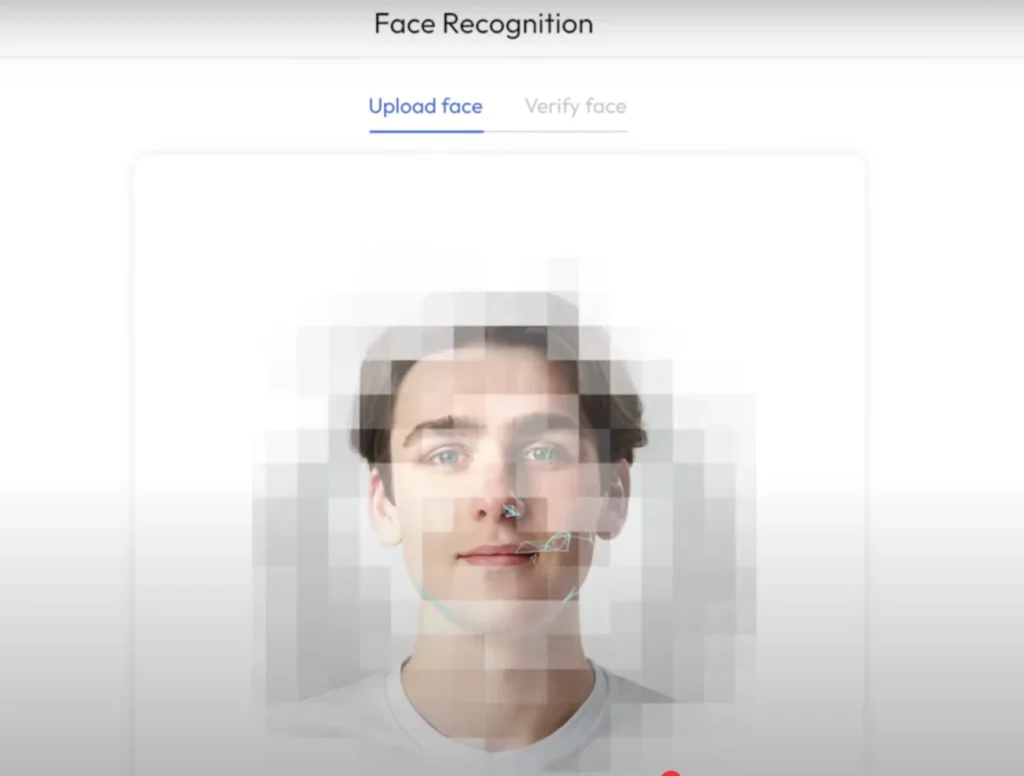

2. Facial Analysis: The system analyzes unique facial features using advanced algorithms.

3. Identity Matching: The analyzed data is compared against official records or documents to verify the user’s identity.

4. Result Delivery: The system provides a verification result, confirming whether the identity matches the provided information.

Integrating Avahi AI’s facial recognition offers a scalable and user-friendly solution as social media platforms look to increase security, authenticity, and regulatory compliance.

From eliminating fake accounts to enhancing user trust, biometric verification brings a new standard of accountability and convenience to the digital social space. Using facial recognition responsibly, platforms can create a more secure and credible environment for their global user base.
